The Most Overlooked Goldmine in Scraping: Public Files Hiding in Plain Sight

Most scraping targets web pages: product listings, blogs, directories. That’s common and useful, but still surface-level. The real value often sits deeper, inside files: PDFs, Excel sheets, Word docs, and presentations. They’re public, linked on websites or indexed by Google, yet rarely collected at scale.

These files contain the details companies don’t polish away: numbers, contracts, technical specs. It’s data that can create a real advantage when discovered and analyzed in volume.

With Hexomatic’s Files & Documents Finder, you can use Google operators or crawl domains to systematically uncover and extract this layer of hidden information.

Why Files Are Different

Files usually contain:

Raw numbers and terms instead of marketing copy

Tables, clauses, and disclosures you won’t find on a webpage

Data that traditional scrapers miss because it isn’t in the page’s HTML

This is where the truth lives.

Three Ways to Scrape Files at Scale

Most of the overlooked value in scraping comes down to how you find and collect the files before extracting data. There are three main approaches:

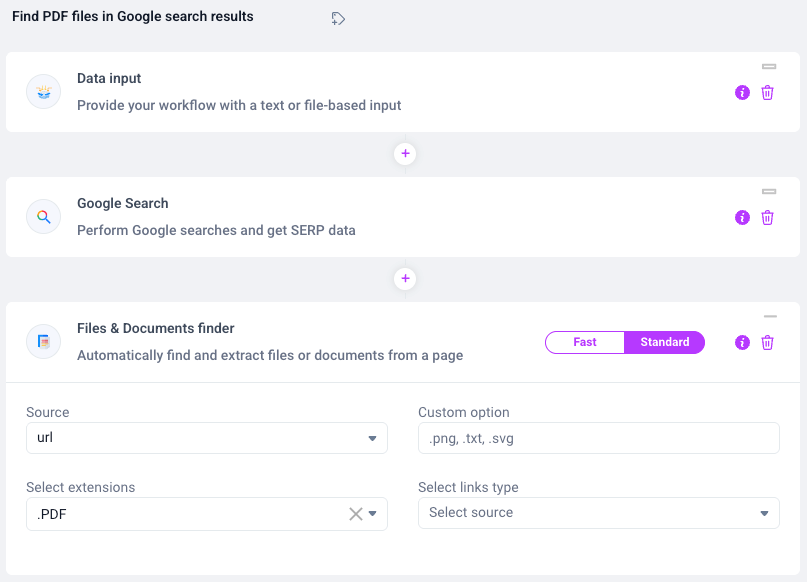

1. Search with Google Operators

You can use advanced queries to uncover files directly from Google. For example, run searches like:

filetype:pdf pricing site:competitor.com

"safety data sheet" filetype:pdf site:.gov

filetype:xlsx contract site:.org

Workflow: Feed a list of keywords into Hexomatic’s Google Search automation → analyze each result page for files → extract PDFs, Excel sheets, or Word docs → process with parsing/OCR → export to Sheets.
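If you want to prepare the keyword list before feeding it into a search automation, the query strings themselves are easy to generate. The sketch below is a minimal illustration in plain Python, not part of Hexomatic; the function name `build_queries` and the sample inputs are assumptions made for the example.

```python
from itertools import product


def build_queries(keywords, filetypes, sites):
    """Combine keywords, file types, and site filters into search strings."""
    queries = []
    for keyword, filetype, site in product(keywords, filetypes, sites):
        # Quote multi-word keywords so they are searched as an exact phrase.
        term = f'"{keyword}"' if " " in keyword else keyword
        queries.append(f"filetype:{filetype} {term} site:{site}")
    return queries


# Hypothetical inputs, mirroring the example searches above.
queries = build_queries(
    keywords=["pricing", "safety data sheet"],
    filetypes=["pdf"],
    sites=["competitor.com"],
)
for query in queries:
    print(query)
```

Every keyword is paired with every file type and site, so a short list of each can expand into a large batch of searches.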

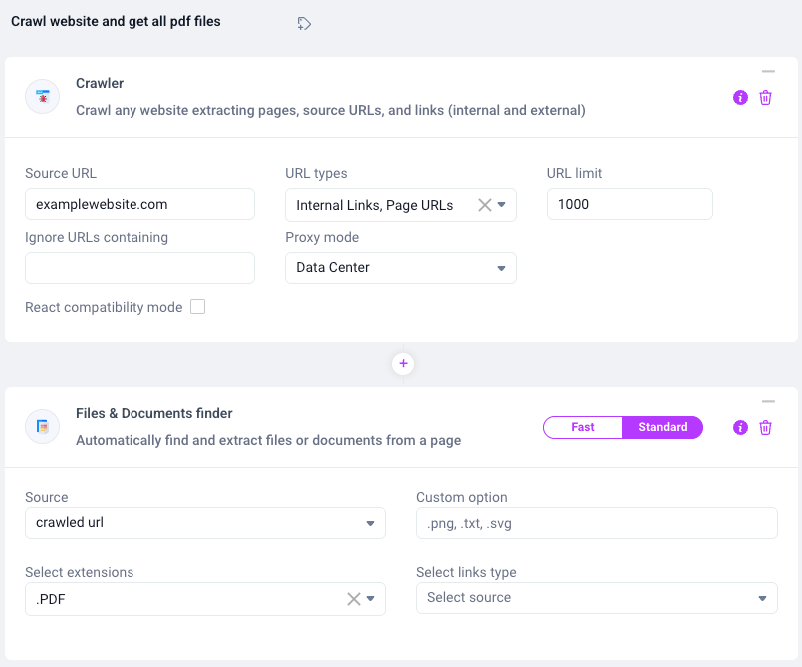

2. Crawl a Website for Files

If you already know the site you want to monitor, crawling it directly is faster than searching. A crawl also uncovers files that are linked on the site but don’t appear in Google results.

Workflow: Crawl the target site → detect all linked files by type (PDF, DOCX, XLSX, PPTX) → extract text/tables with Hexomatic’s parsing tools → run AI or keyword filters → export results.
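To make the "detect all linked files by type" step concrete, here is a minimal sketch using only Python's standard library. It shows the general idea rather than how Hexomatic implements it: parse a page's HTML, resolve each link against the page URL, and keep links whose path ends in one of the target extensions. The names `FileLinkParser` and `find_file_links`, and the sample HTML, are assumptions for illustration.

```python
from html.parser import HTMLParser
from pathlib import PurePosixPath
from urllib.parse import urljoin, urlparse

# File types named in the workflow above.
FILE_EXTENSIONS = {".pdf", ".docx", ".xlsx", ".pptx"}


class FileLinkParser(HTMLParser):
    """Collects absolute URLs of <a href> links that point to target file types."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.file_links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if not href:
            return
        absolute = urljoin(self.base_url, href)
        # Check the extension on the URL path only, ignoring any query string.
        suffix = PurePosixPath(urlparse(absolute).path).suffix.lower()
        if suffix in FILE_EXTENSIONS and absolute not in self.file_links:
            self.file_links.append(absolute)


def find_file_links(html, base_url):
    parser = FileLinkParser(base_url)
    parser.feed(html)
    return parser.file_links


# Hypothetical page content standing in for a crawled page.
sample_html = (
    '<a href="/docs/Report.PDF">Report</a> '
    '<a href="about.html">About</a> '
    '<a href="https://cdn.example.com/data.xlsx?v=2">Data</a>'
)
links = find_file_links(sample_html, "https://example.com/page/")
print(links)
```

Matching on the extension is a simplification: files served from URLs without an extension would need a check on the response's Content-Type header instead.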

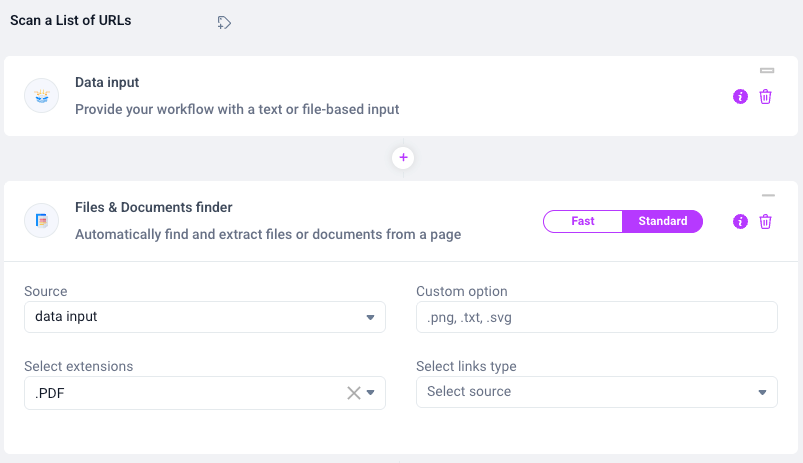

3. Scan a List of URLs

Sometimes you already have the sources. Think of regulators that publish hundreds of links, or a list of supplier portals. In that case, you can run a batch scan.

Workflow: Import your list of URLs into Hexomatic → scan each one for file types you specify → download or parse the files → centralize results in a database or CRM.

Use case examples: monitoring regulator URLs for legal settlements, auditing supplier sites for certifications, scanning research institutes for new publications.
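For the "centralize results in a database" step, repeated scans of the same URLs will keep finding the same files, so it helps to store each file only once. The sketch below assumes a simple SQLite table keyed on the file URL; the table name `found_files`, the column names, and the sample rows are hypothetical, and a CRM or another database would need its own equivalent.

```python
import sqlite3


def open_store(path=":memory:"):
    """Open (or create) a SQLite store with one row per discovered file."""
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS found_files (
               file_url   TEXT PRIMARY KEY,
               source_url TEXT NOT NULL,
               file_type  TEXT NOT NULL
           )"""
    )
    return conn


def count_files(conn):
    return conn.execute("SELECT COUNT(*) FROM found_files").fetchone()[0]


def save_files(conn, rows):
    """Insert (file_url, source_url, file_type) rows; return how many were new."""
    before = count_files(conn)
    # INSERT OR IGNORE skips rows whose file_url is already stored.
    conn.executemany("INSERT OR IGNORE INTO found_files VALUES (?, ?, ?)", rows)
    conn.commit()
    return count_files(conn) - before


# Hypothetical scan results from two runs over the same source page.
conn = open_store()
source = "https://regulator.example/notices"
first_run = save_files(conn, [
    ("https://regulator.example/a.pdf", source, "pdf"),
    ("https://regulator.example/b.xlsx", source, "xlsx"),
])
second_run = save_files(conn, [
    ("https://regulator.example/a.pdf", source, "pdf"),  # already known
    ("https://regulator.example/c.pdf", source, "pdf"),  # new since last scan
])
print(first_run, second_run)
```

The count returned by each run doubles as a simple "what's new" signal for monitoring use cases like the ones above.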

The Strategic Edge

Scraping files gives you access to information your competitors ignore. You get contract terms, raw numbers, and technical data while they’re still chasing product pages.

Hexomatic makes it simple:

Search with Google dork operators at scale

Crawl domains for hidden files

Extract tables and text with OCR and parsing tools

Send results into Sheets, CRMs, or AI workflows

Next Step

If you want this set up for your business, book our Concierge Service. We’ll build a tailored file-scraping workflow so you can start collecting insights without wasting time on setup.

The files are already public. The only difference is who’s using them.